FB LAB ~Electronics Laboratory ~

Future operation system with the AI Communication Box

The operator says “Change operating band to 7 MHz.” into a microphone, and the radio's operating band changes to 7 MHz without anyone pushing or touching a control on the radio front panel. An interface unit detects the spoken message and converts the voice audio into commands, and those commands control the radio. That is cool.

At Ham Fair 2018 in Tokyo, we found two unique concept products displayed and demonstrated in the Icom booth as a future operating style. One, called the “AI Communication Box,” is a stand-alone type. The other, called the “HF Transceiver system powered by Alexa,” is a Cloud type used with an “Amazon Echo.” Each is connected to an Icom radio, and each has an interface unit that converts voice audio into Icom CI-V (Communication Interface-V) commands. The commands control the radio.

Many visitors to the Ham Fair probably came expecting to see new Icom products, but they stopped by the AI Communication Box corner to see this near-future communication system. The FB NEWS editorial staff visited the Icom Narayama Laboratory to learn more about the system. Our report on the visit follows.

Icom Narayama Laboratory, located in Japan’s Nara Prefecture.

● Stand-alone type Icom AI speaker “AI Communication Box”

The AI Communication Box is the interface unit, with the Julius© speech recognition engine and the MMDAgent© speech synthesizer software installed inside it. A large number of vocabulary words and basic grammar are preprogrammed into the speech recognition engine. When you speak into the microphone and the engine detects your speech, the unit sends the CI-V commands corresponding to each word or sentence to your radio to control it.

AI Communication Box connected to the IC-7610 through USB (CI-V).
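
The conversion from a recognized sentence to a CI-V command can be sketched as follows. This is only an illustration, not the code running in the AI Communication Box: the function names and the phrase matching are hypothetical, the radio address 0x98 assumes the IC-7610 is still set to its default CI-V address, and treating "7 MHz" as a request to set the frequency to exactly 7.000 MHz is a simplification of a band change. The sketch builds a CI-V "set frequency" frame (command 0x05) with the frequency encoded as five BCD bytes, least significant digits first.

```python
import re
from typing import Optional

CIV_PREAMBLE = b"\xfe\xfe"   # every CI-V frame starts with FE FE
CIV_END = b"\xfd"            # and ends with FD
RADIO_ADDR = 0x98            # assumption: IC-7610 left at its default address
CONTROLLER_ADDR = 0xE0       # conventional address for the controlling device
CMD_SET_FREQ = 0x05          # CI-V "set operating frequency" command


def freq_to_bcd(hz: int) -> bytes:
    """Encode a frequency in Hz as 5 BCD bytes, least significant pair first."""
    if not 0 <= hz < 10**10:
        raise ValueError("frequency does not fit in 10 BCD digits")
    digits = f"{hz:010d}"                                  # e.g. "0007000000"
    pairs = [digits[i:i + 2] for i in range(0, 10, 2)]     # most significant first
    # Reading a decimal pair such as "25" as hex yields the BCD byte 0x25.
    return bytes(int(pair, 16) for pair in reversed(pairs))


def phrase_to_civ(text: str) -> Optional[bytes]:
    """Hypothetical mapping: find 'N MHz' in recognized text and build a frame."""
    match = re.search(r"(\d+(?:\.\d+)?)\s*MHz", text, re.IGNORECASE)
    if match is None:
        return None                                        # nothing we can act on
    hz = round(float(match.group(1)) * 1_000_000)
    header = bytes([RADIO_ADDR, CONTROLLER_ADDR, CMD_SET_FREQ])
    return CIV_PREAMBLE + header + freq_to_bcd(hz) + CIV_END


if __name__ == "__main__":
    frame = phrase_to_civ("Change operating band to 7 MHz.")
    print(frame.hex(" "))
```

For the sentence quoted at the top of this article, the sketch produces the bytes FE FE 98 E0 05 00 00 00 07 00 FD. Writing those bytes to the radio's USB serial port is left out here.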

We asked the researcher in charge of the AI Communication Box about the difficulties encountered during development. He answered: “The most difficult part of this development was getting the proper microphone sensitivity. At first, we installed the microphone element inside the AI Communication Box, but not enough of the voice level reached the element, so the system did not work properly. We tested a variety of microphones and, in the end, reluctantly decided to connect a microphone externally. In addition, the prototype AI Communication Box did not respond quickly enough to voice input, so we could not tell whether the system had recognized the voice. We came up with a small idea to show this: a 3-color LED.” If the LED lights immediately after you speak, the system has recognized your voice.

The FB NEWS editorial staff also asked about the accuracy of the voice recognition. They told us: “The accuracy of the voice recognition is approximately 90%, but that is when we tested it in a quiet place. This time, we demonstrated it in the very noisy environment of the Ham Fair event hall, and the recognition accuracy was not as good as in the quiet place. Increasing the recognition accuracy is one of our challenges for the future. We also want to register more CI-V commands to control radios, and we want to control radios through Bluetooth equipment.”

■ Operation with the AI Communication Box

Features of the stand-alone type are shown below.

- You can control radios without having a network environment.

- No virus protection is necessary.

- Many vocabulary words can be registered into the recognition engine to recognize speech.

- Many vocabulary words can be preprogrammed to output as speech with an internal speech synthesizer.

The development staff received many questions from visitors at the Ham Fair, such as when it will go on sale, how much it will cost, and whether the system can recognize local accents. The staff felt that visitors were genuinely interested in the system.

● Cloud type AI speaker using the Amazon Echo

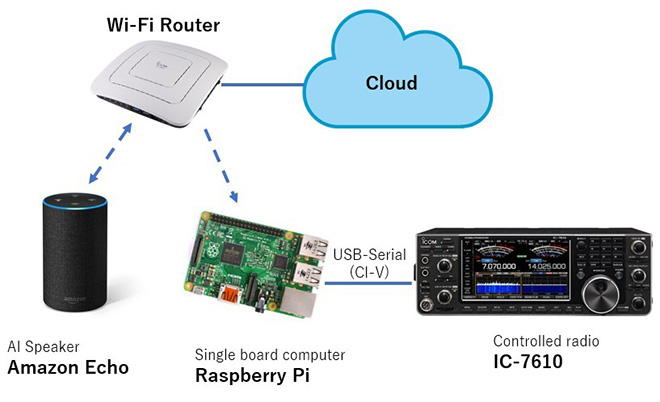

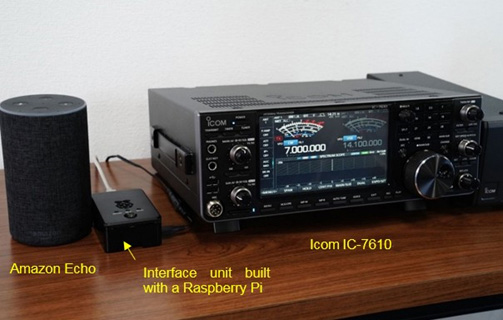

The system consists of an Amazon Echo, a Wi-Fi router, and a Raspberry Pi, as shown in the diagram below. When you speak to the Amazon Alexa Cloud service through the Amazon Echo, the service recognizes your speech. A dedicated server (Socket.IO) recognizes the corresponding CI-V commands, and the Raspberry Pi controls the radio with those commands.

The FB NEWS editorial staff also interviewed the researcher who developed the Cloud type AI Communication Box. The researcher told us that he introduced Socket.IO between the dedicated server and the Raspberry Pi so that neither a fixed IP address contract nor port forwarding would be needed. Unlike the stand-alone type, speech recognition relies on the Cloud-based Amazon Alexa, so it is difficult to develop and tune independently. The most difficult part was coordinating the dedicated server and the computer (Raspberry Pi). When the computer is turned on, it automatically performs a handshake with the dedicated server, and once the handshake completes, the server can send data at any time. He had a hard time getting this bidirectional communication to work.
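
Why a connection opened by the Raspberry Pi removes the need for a fixed IP address and port forwarding can be illustrated with a minimal sketch. This is neither Socket.IO nor the researcher's code: it is a plain TCP stand-in using only Python's standard library, run entirely on the local machine, and the `HELLO pi` handshake line, the `SET_FREQ 7000000` message, and all function names are hypothetical. The Pi dials out to the server, and after the handshake the server pushes a command back over that same connection.

```python
import socket
import threading


def relay_server(listener: socket.socket, command: str) -> None:
    """Stand-in for the dedicated server: wait for the Pi, then push a command."""
    conn, _ = listener.accept()
    with conn, conn.makefile("r", encoding="ascii", newline="\n") as reader:
        hello = reader.readline().strip()          # handshake sent by the Pi
        if hello == "HELLO pi":
            # The channel is already open, so the server can send at any time.
            conn.sendall((command + "\n").encode("ascii"))


def pi_client(host: str, port: int) -> str:
    """Stand-in for the Raspberry Pi: it only ever makes an outbound connection."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(b"HELLO pi\n")                # handshake at power-on
        with sock.makefile("r", encoding="ascii", newline="\n") as reader:
            return reader.readline().strip()       # command pushed by the server


def run_demo(command: str = "SET_FREQ 7000000") -> str:
    """Run server and client on the loopback interface; return what the Pi got."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))                # port 0: let the OS pick one
    listener.listen(1)
    port = listener.getsockname()[1]
    server = threading.Thread(target=relay_server, args=(listener, command),
                              daemon=True)
    server.start()
    try:
        return pi_client("127.0.0.1", port)
    finally:
        server.join(timeout=5)
        listener.close()


if __name__ == "__main__":
    print(run_demo())
```

In a real deployment, a library such as Socket.IO would also handle reconnection and message framing, which this sketch leaves out.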

We also asked about current issues and future development.

“The problem at the moment is that the command may become longer compared to the stand-alone type. With the stand-alone type, you can change the frequency with voice commands such as ‘Change frequency’ → ‘Please set value’ → ‘7 MHz.’ With the Cloud type, you say the directive ‘Alexa, set the frequency to 7 MHz.’ In the future, I would like to make the directive a little shorter in the Cloud type as well. Also, because the directive is sent through the Internet, some delay is inevitable, so improving communication efficiency is another future task.”

Example of the system connections
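
The two ways of setting a frequency described in the interview can be contrasted in a small sketch. It assumes that "Please set value" is the prompt spoken back by the stand-alone box; the class and function names, the "OK" and "Sorry" replies, and the phrase matching are hypothetical and only illustrate the difference between a step-by-step dialog and a single directive.

```python
import re
from typing import Optional, Tuple

FREQ_PATTERN = re.compile(r"(\d+(?:\.\d+)?)\s*MHz", re.IGNORECASE)


def parse_mhz(text: str) -> Optional[int]:
    """Return the frequency in Hz mentioned in the text, or None."""
    match = FREQ_PATTERN.search(text)
    if match is None:
        return None
    return round(float(match.group(1)) * 1_000_000)


class StandAloneDialog:
    """Step-by-step dialog: a short command first, then the value."""

    def __init__(self) -> None:
        self.awaiting_value = False

    def hear(self, utterance: str) -> Tuple[str, Optional[int]]:
        """Return (spoken reply, frequency in Hz once it is known)."""
        if not self.awaiting_value:
            if utterance.strip().rstrip(".").lower() == "change frequency":
                self.awaiting_value = True
                return "Please set value", None
            return "Sorry", None                    # hypothetical fallback reply
        hz = parse_mhz(utterance)
        if hz is None:
            return "Please set value", None         # ask again for the value
        self.awaiting_value = False
        return "OK", hz                             # hypothetical confirmation


def cloud_directive(utterance: str) -> Optional[int]:
    """Single directive: command and value arrive in one sentence."""
    if "set the frequency" not in utterance.lower():
        return None
    return parse_mhz(utterance)


if __name__ == "__main__":
    dialog = StandAloneDialog()
    print(dialog.hear("Change frequency"))
    print(dialog.hear("7 MHz."))
    print(cloud_directive("Alexa, Set the frequency to 7 MHz."))
```

Both paths end with the same frequency value; the difference is whether the user supplies it in several short turns or in one longer sentence.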

■ Operation with the AI Communication Box

Advantages of the Cloud type are shown below.

- You can control radios through your smartphone instead of the Amazon Echo.

- Voice recognition accuracy is higher than for the stand-alone type.

- No voice recognition engine or speech synthesizer is needed.

When we asked the researcher more about future development, he said, “I do not think that we will make it a commercial product, but I would like to continue this kind of research in the future. I hope to be able to present something new at next year's Ham Fair as well. Please look forward to it.”

Left: AI Communication Box with a new microphone

Right: The AI Communication Box that was displayed at the Ham Fair 2018.

[Speech recognition]

・Large Vocabulary Continuous Speech Recognition Engine Julius

Copyright (c) 1991 Kawahara Lab., Kyoto University

Copyright (c) 1997 Information-technology Promotion Agency, Japan

Copyright (c) 2000 Shikano Lab., Nara Institute of Science and Technology

Copyright (c) 2005 Julius project team, Nagoya Institute of Technology

・Julius Dictation Kit

Copyright (c) 1991 Kawahara Lab., Kyoto University

Copyright (c) 2005 Lee Lab., Nagoya Institute of Technology

[Speech synthesis]

・「The Toolkit for Building Voice Interaction Systems "MMDAgent"」

Copyright (c) 2009 Nagoya Institute of Technology Department of Computer Science

・「MMDAgent HTS Voice "Mei"」
